Alexnet input 224?227

Published:

0.结论

- 224的输入配套会给一个

padding=2, 227的输入只有kernel=11,stride=4,整体计算得到第一个卷积层的特征输出都是55,这里没有区别。- pytorch的实现和caffe原版的实现第一和第二个卷积层的输出通道数不同,但是整体参数量没有很大变化,都是6kw左右。

1.问题

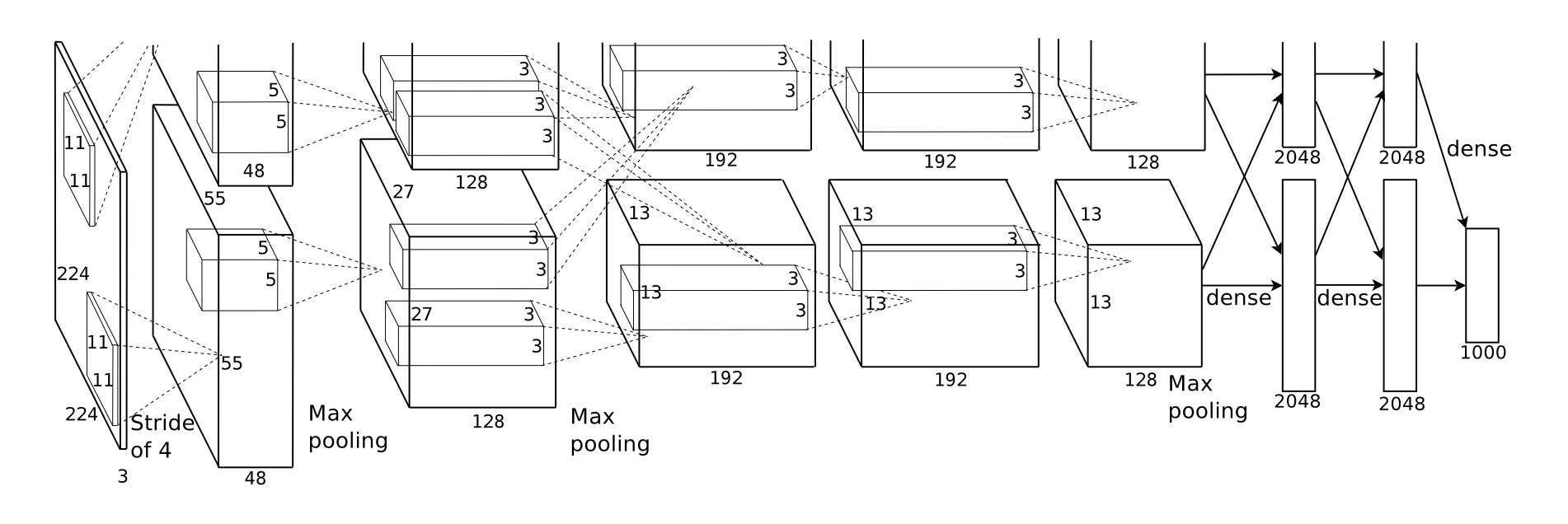

在Alexnet的论文中,结构图上给的输入是224,但是如果按照224去计算,得到的下一次的输出不是55,即:

\[\begin{aligned} (224-11)/4+1&=&54.25 \\ (227-11)/4+1&=&55 \end{aligned}\]而Alexnet训练时的数据处理步骤为:

- 下采样得到$256\times 256$的图像

- 从256的图像中crop出224的图像

因此有人认为,在真正送入网络之前,可能加了3个像素的padding,得到227

2. 解决问题

2.1 文字描述

类似的言论在cs231的资料中也有提及

The Krizhevsky et al. architecture that won the ImageNet challenge in 2012 accepted images of size [227x227x3]

2.2 代码层面

在AlexNet的Caffe实现中,可以看到使用的图像尺寸其实是227

name: "AlexNet"

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: true

crop_size: 227

不过,在pytorch或者是mmpretrain的Alexnet实现中,使用的都是224

# pytorch

[docs]class AlexNet_Weights(WeightsEnum):

IMAGENET1K_V1 = Weights(

url="https://download.pytorch.org/models/alexnet-owt-7be5be79.pth",

transforms=partial(ImageClassification, crop_size=224),

# mmpretrain

@MODELS.register_module()

class AlexNet(BaseBackbone):

"""`AlexNet <https://en.wikipedia.org/wiki/AlexNet>`_ backbone.

The input for AlexNet is a 224x224 RGB image.

Args:

num_classes (int): number of classes for classification.

The default value is -1, which uses the backbone as

a feature extractor without the top classifier.

"""

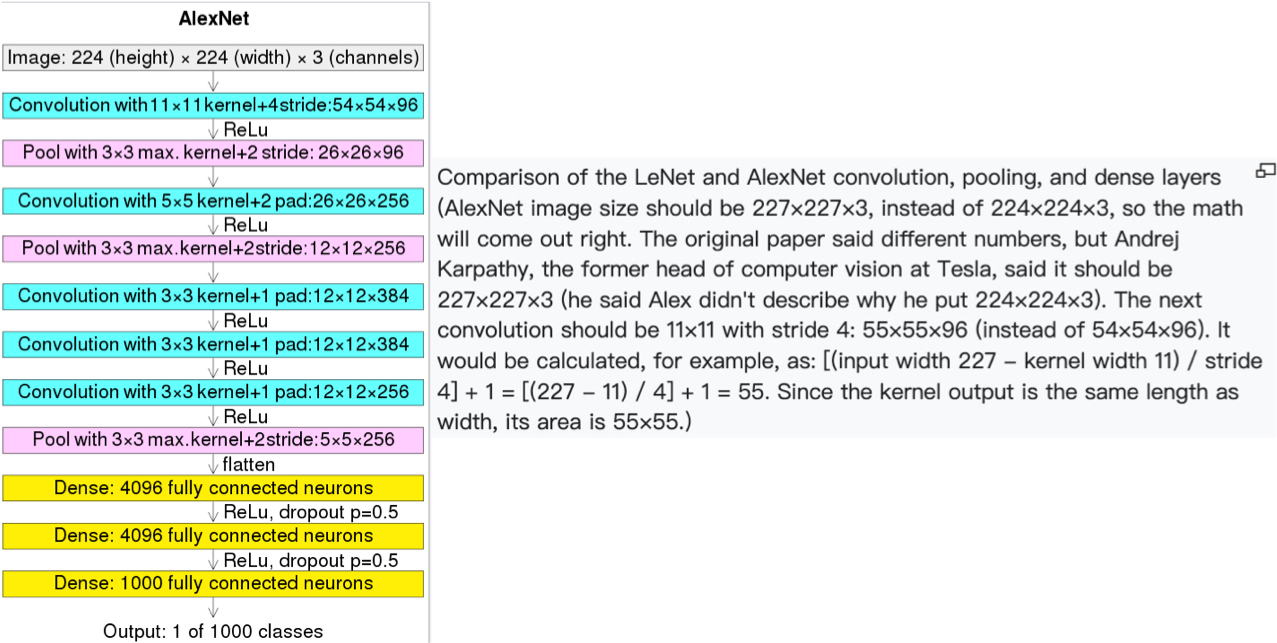

2.3 手动计算比较

以224为输入(pytorch等实现)

-------------------------------------------------------------------------------------------------------------------------

Layer (type) Output Shape Param # kernel

=========================================================================================================================

Conv2d-1 [-1, 64, 55, 55] 3*11*11*64+64=23,296 k=11,s=4,p=2 (224+4-11)/4+1=55.25

ReLU-2 [-1, 64, 55, 55] 0

MaxPool2d-3 [-1, 64, 27, 27] 0 k=3, s=2, (55-3)/2+1=27

Conv2d-4 [-1, 192, 27, 27] 64*5*5*192+192=307,392 k=5, p=2, (27+4-5)+1=27

ReLU-5 [-1, 192, 27, 27] 0

MaxPool2d-6 [-1, 192, 13, 13] 0 k=3, s=2, (27-3)/2+1=13

Conv2d-7 [-1, 384, 13, 13] 192*3*3*384+384=663,936 k=3, p=1, (13-3+2)+1=13

ReLU-8 [-1, 384, 13, 13] 0

Conv2d-9 [-1, 256, 13, 13] 384*3*3*256+256=884,992 k=3, p=1, (13-3+2)+1=13

ReLU-10 [-1, 256, 13, 13] 0

Conv2d-11 [-1, 256, 13, 13] 256*3*3*256+256=590,080 k=3, p=1, (13-3+2)+1=13

ReLU-12 [-1, 256, 13, 13] 0

MaxPool2d-13 [-1, 256, 6, 6] 0 k=3, s=2, (13-3)/2+1=6

AdaptiveAvgPool2d-14 [-1, 256, 6, 6] 0 可能k=3, p=1,(6-3+2)+1=6

Dropout-15 [-1, 9216] 0

Linear-16 [-1, 4096] (256*6*6)9216*4096+4096=37,752,832

ReLU-17 [-1, 4096] 0

Dropout-18 [-1, 4096] 0

Linear-19 [-1, 4096] 4096*4096+4096=16,781,312

ReLU-20 [-1, 4096] 0

Linear-21 [-1, 1000] 4096*1000+1000=4,097,000

===========================================================================================================================

Total params: 61,100,840

以227为输入(Alexnet原论文,caffe实现)

------------------------------------------------------------------------------------------------------------------------

Layer (type) Output Shape Param # kernel

=========================================================================================================================

Conv2d-1 🔝[-1, 96, 55, 55] 3*11*11*96+96=34,944 k=11,s=4, (227-11)/4+1=55

ReLU-2 [-1, 96, 55, 55] 0

MaxPool2d-3 [-1, 96, 27, 27] 0 k=3, s=2, (55-3)/2+1=27

Conv2d-4 🔝[-1, 256, 27, 27] 96*5*5*256+256=614,656 k=5, p=2, (27+4-5)+1=27

ReLU-5 [-1, 256, 27, 27] 0

MaxPool2d-6 [-1, 256, 13, 13] 0 k=3, s=2, (27-3)/2+1=13

Conv2d-7 [-1, 384, 13, 13] 256*3*3*384+384=885,120 k=3, p=1, (13-3+2)+1=13

ReLU-8 [-1, 384, 13, 13] 0

Conv2d-9 🔝[-1, 384, 13, 13] 384*3*3*384+384=1,327,488 k=3, p=1, (13-3+2)+1=13

ReLU-10 [-1, 384, 13, 13] 0

Conv2d-11 [-1, 256, 13, 13] 384*3*3*256+256=884,992 k=3, p=1, (13-3+2)+1=13

ReLU-12 [-1, 256, 13, 13] 0

MaxPool2d-13 [-1, 256, 6, 6] 0 k=3, s=2, (13-3)/2+1=6

AdaptiveAvgPool2d-14 [-1, 256, 6, 6] 0

Dropout-15 [-1, 9216] 0

Linear-16 [-1, 4096] 37,752,832

ReLU-17 [-1, 4096] 0

Dropout-18 [-1, 4096] 0

Linear-19 [-1, 4096] 16,781,312

ReLU-20 [-1, 4096] 0

Linear-21 [-1, 1000] 4,097,000

===========================================================================================================================

Total params: 62,368,344

可以看到,

- 主要是前两个卷积层部分的输出通道数不一致,

- 但是整体参数量都是6kw左右,参数量并没有发生很大变化,

- caffe实现的Alexnet看起来结构更对称一些。